Analysing Eye Gaze Patterns during Confusion and Errors in Human–Agent Collaborations

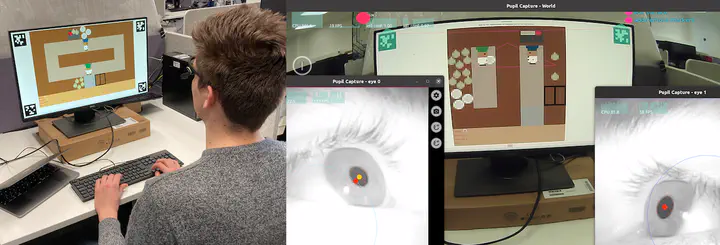

Experiment Setup


Abstract

As human–agent collaborations become more prevalent, it is increasingly important for an agent to be able to adapt to its collaborator and to explain its own behavior. To do so, the agent needs to identify critical states during the interaction that call for proactive clarification or behavioral adaptation. In this paper, we explore whether an agent could infer such states from the human's eye gaze. To this end, we compare gaze patterns across different situations in a collaborative task. Our findings show that the human's gaze patterns differ significantly between times at which the user is confused about the task, times at which the agent makes an error, and times of normal workflow. During errors, the amount of gaze directed at the agent increases, whereas during confusion, the amount of gaze directed at the environment increases. We conclude that these signals could tell the agent when and what to explain.
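To make the comparison of "amount of gaze" more concrete, the following is a minimal sketch, assuming the gaze recording has already been reduced to one (situation, area-of-interest) label per gaze sample. It computes, for each situation, the share of samples that fall on each area of interest. The function name `gaze_proportions` and labels such as `"agent"`, `"environment"`, `"error"`, `"confusion"`, and `"normal"` are hypothetical illustrations; the abstract does not specify how gaze was segmented or quantified, so this is not presented as the authors' analysis pipeline.

```python
from collections import Counter, defaultdict
from collections.abc import Iterable


def gaze_proportions(
    samples: Iterable[tuple[str, str]],
) -> dict[str, dict[str, float]]:
    """Share of gaze samples per area of interest (AOI), grouped by situation.

    Hypothetical input format (an assumption, not from the paper): each item
    is a (situation, aoi) pair, e.g. ("error", "agent"), one per gaze sample.
    """
    # Count gaze samples for every AOI within each situation.
    counts: dict[str, Counter] = defaultdict(Counter)
    for situation, aoi in samples:
        counts[situation][aoi] += 1

    # Normalise the counts so each situation's AOI shares sum to 1.
    result: dict[str, dict[str, float]] = {}
    for situation, aoi_counts in counts.items():
        total = sum(aoi_counts.values())
        result[situation] = {aoi: n / total for aoi, n in aoi_counts.items()}
    return result
```

Normalising within each situation, rather than using raw sample counts, keeps situations of different duration comparable; whether the original study used proportions, durations, or fixation counts is not stated in the abstract.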