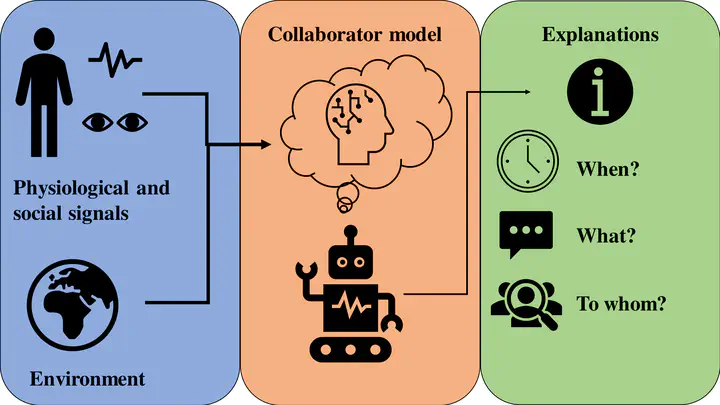

A robot considering its collaborator's social and physiological signals in order to plan when and what to explain

Abstract

As we increasingly encounter robots in our everyday lives, engage with them in social interactions, and collaborate with them on common tasks, it is important that we endow them with the capability to adapt to our abilities and preferences. Moreover, we want them to be able to explain the decisions they make in collaborative tasks, in order to maximise rapport and to build trust and acceptance. Current explanations lack a focus on the individual user's needs, which is why we aim to learn when and what to explain during a collaboration. This paper proposes a roadmap that we plan to implement, with the goal of inferring the user's mental state from physiological and social signals in order to inform explanation generation and robot adaptation. We present preliminary results using eye gaze, as well as a planned framework that allows a collaborative robot to adapt to its human collaborator and tailor its explanations to them in order to minimise confusion during the interaction.