A Survey of Evaluation Methods and Metrics for Explanations in Human–Robot Interaction (HRI)

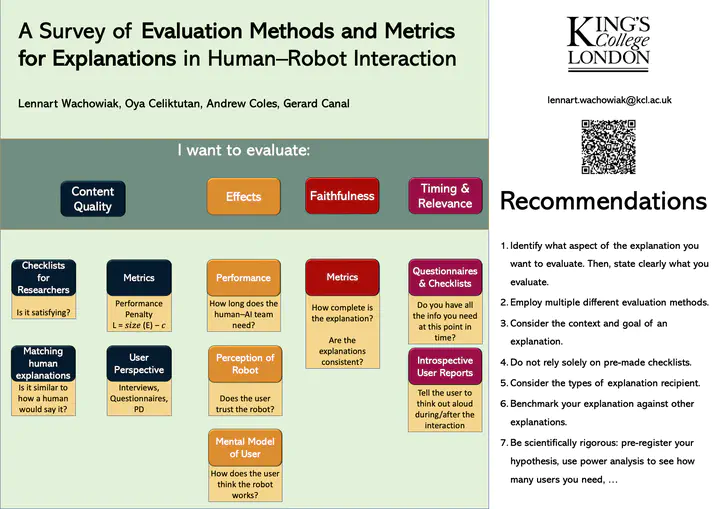

A poster providing a paper overview

Abstract

The crucial role of explanations in making AI safe and trustworthy has been recognized not only by the machine learning community but also by roboticists and human–robot interaction researchers. A robot that can explain its actions is expected to be perceived more favorably by the user, to be more reliable, and to seem more trustworthy. In collaborative scenarios, explanations are often expected to improve even the team's performance. To test whether a newly developed explanation-related ability lives up to these promises, it is essential to evaluate it rigorously. Because explanations have many aspects that can be evaluated, and these aspects vary in importance across circumstances, a plethora of evaluation methods is available. In this survey, we provide a comprehensive overview of such methods while discussing features and considerations unique to explanations given during human–robot interactions.