A Time Series Classification Pipeline for Detecting Interaction Ruptures in HRI Based on User Reactions

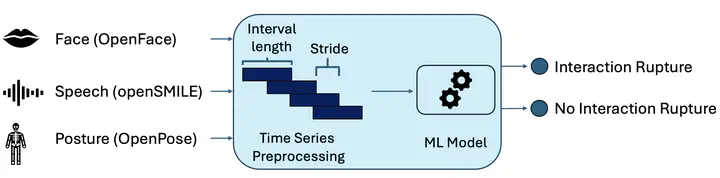

Pipeline overview

Abstract

To react to interaction ruptures such as errors, a robot needs a way to recognize that a rupture has occurred. We test whether interaction ruptures can be detected from the user’s anonymized speech, posture, and facial features. We show how to approach this task by presenting a time series classification pipeline that works well with various machine learning models. A sliding window is applied to the data, and the continuously updated predictions make the pipeline suitable for detecting ruptures in real time. Our best model, an ensemble of MiniRocket classifiers, is the winning approach to the ICMI ERR@HRI challenge. A feature importance analysis shows that the model relies heavily on speaker diarization data, which indicates who spoke when. Posture data, on the other hand, impedes performance.
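To illustrate the sliding-window idea, the following is a minimal sketch rather than the pipeline's actual implementation. The names `sliding_windows` and `toy_classifier`, the window size, the stride, and the toy data are all assumptions made for illustration; a trained model such as a MiniRocket ensemble would take the place of the placeholder classifier.

```python
from typing import Iterator, List, Sequence, Tuple

Frame = Sequence[float]  # one time step of features (hypothetical layout)


def sliding_windows(
    frames: Sequence[Frame], window_size: int, stride: int
) -> Iterator[Tuple[int, List[Frame]]]:
    """Yield (end_index, window) pairs over a multivariate time series.

    end_index is the index of the last frame in the window, i.e. the
    earliest moment at which a prediction for that window can be emitted.
    """
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")
    for start in range(0, len(frames) - window_size + 1, stride):
        end = start + window_size
        yield end - 1, list(frames[start:end])


def toy_classifier(window: Sequence[Frame]) -> int:
    """Placeholder for a trained model (assumption, not the paper's model).

    Returns 1 if the mean of the first feature in the window exceeds 0.5.
    """
    mean_first = sum(frame[0] for frame in window) / len(window)
    return int(mean_first > 0.5)


# Toy series: 8 frames with 2 features each (made-up values).
series = [
    [0.0, 1.0], [0.1, 1.0], [0.2, 1.0], [0.9, 0.0],
    [1.0, 0.0], [1.0, 0.0], [0.1, 1.0], [0.0, 1.0],
]

# One prediction per window, tagged with the frame index at which it is available.
predictions = [
    (t, toy_classifier(window))
    for t, window in sliding_windows(series, window_size=3, stride=1)
]
print(predictions)
```

Because each prediction depends only on frames up to its `end_index`, the same loop can run on a live stream, emitting an updated label whenever a new stride's worth of frames arrives.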