Neurosymbolic Explanation Selection in Robotics: Combining the Strengths of Planning and Foundation Models for XAI

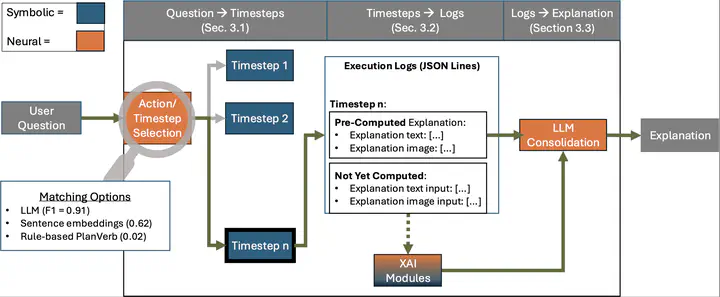

An overview of the neurosymbolic explanation selection pipeline.

Abstract

Robots operating in human environments should be able to answer diverse, explanation-seeking questions about their past behavior. We present a neurosymbolic pipeline that links a task planner with a unified logging interface, which attaches heterogeneous XAI artifacts (e.g., visual heatmaps, navigation feedback) to individual plan steps. Given a natural-language question, a large language model selects the most relevant actions and consolidates the associated logs into a multimodal explanation. In an offline evaluation on 180 questions across six plans in two domains, we show that an LLM-based question matcher retrieves relevant plan steps accurately (F1 score of 0.91), outperforming a lower-compute embedding baseline (F1 score of 0.62) and a rule-based syntax/keyword matcher (F1 score of 0.02). A preliminary user study (N=30) suggests that users prefer the LLM-consolidated explanations over raw logs and planner-only explanations.
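To make the two central ideas concrete, the following minimal sketch shows how XAI artifacts might be attached to individual plan steps and how retrieved steps could be scored against ground-truth relevant steps with a set-based F1. All names here (`PlanStep`, `PlanLog`, `attach`, `collect`, `step_f1`) are hypothetical and chosen for illustration; they are not the interface of the presented system, and the per-question F1 below is an assumption about how such a score could be computed, not a statement of the evaluation protocol.

```python
from dataclasses import dataclass, field


@dataclass
class PlanStep:
    """One action of a plan plus the XAI artifacts logged while executing it."""
    index: int
    action: str
    logs: list = field(default_factory=list)


class PlanLog:
    """Hypothetical unified log: artifacts of any kind are keyed by plan step."""

    def __init__(self, actions):
        self.steps = [PlanStep(i, a) for i, a in enumerate(actions)]

    def attach(self, step_index, kind, payload):
        # Store a heterogeneous artifact (heatmap path, navigation message, ...).
        self.steps[step_index].logs.append({"kind": kind, "payload": payload})

    def collect(self, step_indices):
        # Gather the logs of the selected steps, in plan order.
        return [log for i in sorted(step_indices) for log in self.steps[i].logs]


def step_f1(predicted, relevant):
    """Set-based F1 between predicted and ground-truth plan-step indices."""
    predicted, relevant = set(predicted), set(relevant)
    true_positives = len(predicted & relevant)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(relevant)
    return 2 * precision * recall / (precision + recall)


# Example plan with illustrative (made-up) actions and artifacts.
plan_log = PlanLog(["navigate kitchen", "detect cup", "grasp cup"])
plan_log.attach(0, "navigation_feedback", "replanned around obstacle")
plan_log.attach(1, "heatmap", "heatmap_step1.png")

selected = [1, 0]  # steps a question matcher might return
explanation_inputs = plan_log.collect(selected)
score = step_f1(selected, relevant=[0, 2])
```

In this sketch the question matcher is deliberately left out: any component that maps a question to a set of step indices (an LLM, an embedding model, or a keyword rule) can be plugged in and compared with the same `step_f1` function.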